This $5 Billion Insurance Company Likes To Talk Up Its AI. Now It Is In A Mess Over It

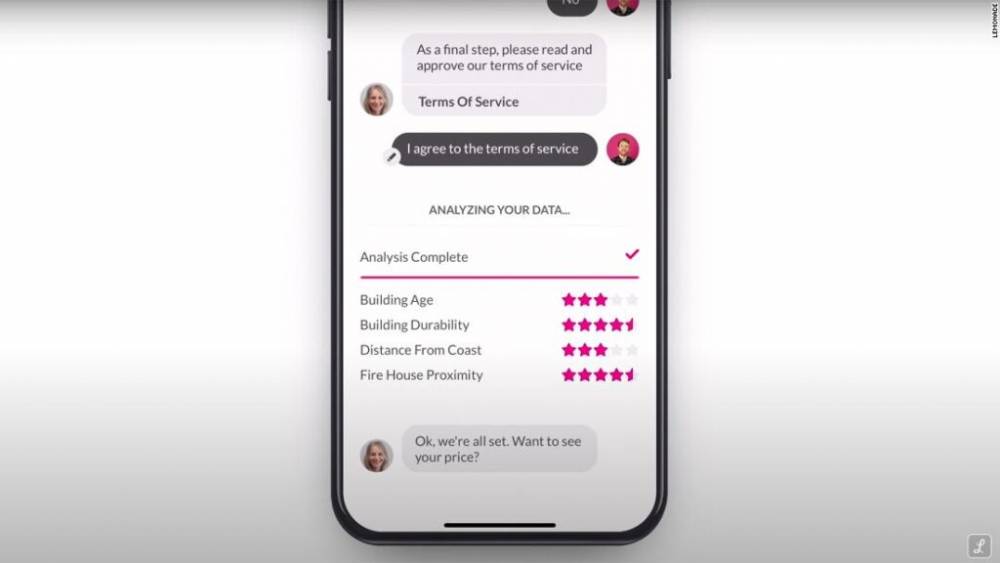

A critical component of insurance company Lemonade's pitch to investors and customers is its ability to use artificial intelligence to disrupt the traditionally staid insurance industry. It promotes friendly chatbots such as AI Maya and AI Jim, which help customers sign up for policies such as homeowners' or pet health insurance and file claims through Lemonade's app. It has also raised hundreds of millions of dollars from public and private market investors, largely by positioning itself as an AI-powered company.

Yet less than a year after going public, the company, now valued at $5 billion, finds itself embroiled in a public relations controversy over the technology that powers its services.

Lemonade explained on Twitter and in a blog post on Wednesday why it deleted what it described as an "awful thread" of tweets it posted on Monday. Among other things, those now-deleted tweets stated that the company's artificial intelligence analyzes the videos users submit when filing insurance claims for signs of fraud, picking up on "non-verbal cues that traditional insurers cannot pick up on because they do not use a digital claims process."

The deleted tweets sparked outrage on Twitter and can still be viewed via the Internet Archive's Wayback Machine. Some Twitter users expressed concern about what they perceived to be a "dystopian" use of technology, as the company's posts implied that its customers' insurance claims could be vetted by AI based on unexplained factors detected in their video recordings. Others scoffed at the company's tweets, calling them "nonsense."

"As an educator who collects examples of AI snake oil in order to educate students about all the harmful technology out there, I want to express my gratitude for your outstanding service," Arvind Narayanan, an associate professor of computer science at Princeton University, tweeted Tuesday in response to Lemonade's tweet about "non-verbal cues."

Confusion over how the company processes insurance claims caused "a spread of falsehoods and incorrect assumptions," Lemonade wrote in a blog post Wednesday. "We're writing this to clarify and unequivocally confirm that our users are not treated differently based on their appearance, behavior, or any personal/physical characteristic."

Lemonade's initially muddled messaging, and the public's response to it, serve as a cautionary tale for the growing number of companies marketing themselves with AI buzzwords. The episode also highlights the challenges the technology poses: while AI can be a selling point, for example by speeding up a typically tedious process such as applying for insurance or filing a claim, it is also a black box. It is not always clear why, how, or even when it is being used to make a decision.

Lemonade stated in a blog post that the term "non-verbal cues" was a "bad choice of words" in its now-deleted tweets. Instead, it said it was referring to its use of facial recognition technology to flag insurance claims submitted by a single person using multiple identities. Flagged claims are then routed to human reviewers, the company noted.

The explanation is similar to what Lemonade described in a blog post in January 2020, in which the company explained how its claims chatbot, AI Jim, flagged attempts by a man to file fraudulent claims using multiple accounts and disguises. While the company did not specify whether it used facial recognition technology in those instances, Lemonade spokeswoman Yael Wissner-Levy confirmed to CNN Business this week that it did.

Though it is becoming more prevalent, facial recognition technology is contentious. It has been shown to be less accurate at identifying people of color, and several Black men have been wrongfully arrested as a result of false facial recognition matches.

Lemonade tweeted on Wednesday that it does not use, and does not intend to develop, artificial intelligence that "utilizes physical or personal features to deny claims (phrenology/physiognomy)," and that it does not evaluate claims based on a person's background, gender, or physical characteristics. Lemonade also stated that it never allows AI to automatically deny claims.

However, in Lemonade's initial public offering paperwork, which was filed with the Securities and Exchange Commission in June, the company stated that AI Jim "handles the entire claim through resolution in approximately a third of cases, paying the claimant or declining the claim without human intervention."

Wissner-Levy told CNN Business that AI Jim is a "branded term" the company uses to refer to its claims automation, and that not everything AI Jim does is based on artificial intelligence. AI Jim uses AI in some cases, such as detecting fraud with facial recognition software, but in others it relies on "simple automation," essentially preset rules, such as checking whether a customer has an active insurance policy or whether the amount of their claim is less than their deductible.

"It's a well-known fact that we automate claim processing. However, as stated in the blog post, the decline and approve actions are not carried out by AI," she said.

When asked how customers are supposed to distinguish between AI and simple automation when both are performed by a product named AI Jim, Wissner-Levy stated that while AI Jim is the name of the chatbot, the company will "never allow AI, in the sense of our artificial intelligence, to determine whether to auto reject a claim."

"We will allow AI Jim, the chatbot with whom you are currently conversing, to reject that based on rules," she added.

When asked whether the AI Jim branding is confusing, Wissner-Levy responded, "I suppose it is in this context." She said this is the first time the company has received complaints that the name is confusing or inconvenient for customers.